As a result of the successful PoC we ran, we detailed our design for Modules and Aggregates in the Continual Aggregate Hub (CAH). And maybe this really is our Kinder Surprise; 3 things in one:

- Maintainable - Modular, clear and clean functional code as close to business terms as possible. Domain Specific Language.

- Testable - Full test coverage. Exploit test-driven development and let the business write test cases as spreadsheets.

- Performance - Linearly scalable. The cost of hardware determines speed, not development time. Too often you start out with nice modules but end up cross-cutting the functionality and chopping up transactions into something that is far from the business terms. That makes a system hard to maintain. We will achieve high performance without rewriting.

This article explains the logical design of the Module that is responsible for Assessment of income tax, the Aggregate that contains the Assessment data, our DSL, deployment issues, and lifetime issues. They represent components of the CAH. "Assessment of income tax" is a type of "Validate consolidate and Fix", and the Assessment data is of type "Fixed values".

We will release the Java source for this, and I expect our contractors (Ergo/Bekk) to blog and talk about the implementation. They too are eager about this subject and will focus on this direction of software design.

Tax Assessment Module

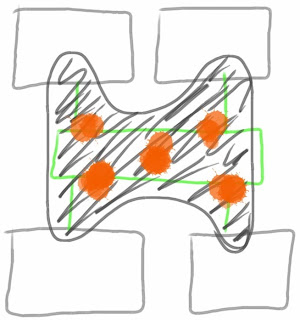

This is a logical view of the Module 'Selvangivelse 2010', which handles the tax assessment form and the case handling on it for the year 2010. There will be one such module for each income year, and it consumes the supporting XML documents for the assessment of tax for that year. The Module is where all the business logic is, and the Modules (of different types) together form the processing chain around the TaxInfo Aggregate Hub. It is layered as defined in DDD and, as you see, the Aggregate Hub is only present at the bottom - through a Repository - and this is also the boundary between pure Java logic and the "grid infrastructure / scalable cache".

Figure: Selvangivelse (Tax form) Module

The core business logic is in 'SelvangivelseService'. This is where the aggregates from the 'SupportRepo' are extracted (through an Anti Corruption Layer) and put into the business domain. All business logic is contained here, and it is where the real consistency checks reside (today there are 3000 assessment rules and 4000 consistency checks). 'SupportRepo' is read-only for this Module and may consist of as many as 47 different schemas. These are supplied by other Modules earlier in the "tax pipeline". 'SelvangivelseService' persists to and reads from 'SelvangivelseRepo', and either makes sure that the last version is consistent or supports manual case handling on it. Tax assessment forms may also be delivered independently by the tax payer (in which case no information about this tax payer exists in the 'SupportRepo'), so it is important to have a full audit of all changes. Tax Calculation is another Module; it is fed by 'SelvangivelseRepo', which is read-only for that component.

There is also a vertical line, which illustrates that usage of the Selvangivelse (the assessment form) must be deployed separately from producing it. (This "read only stack" has been discussed previously; it provides better up-time and makes it possible to migrate Assessment forms in from the Mainframe without converting the logic.)

Most of the business logic in 'SelvangivelseService' is static (the Java construct), so that it does not need any object allocation (it is faster). Object creation is mostly limited to the Entities present in the Aggregates. Limiting the amount of functionality in the Aggregates that go into the cache makes the cache more robust to software upgrades. This may be in conflict with good object orientation, but our findings show a good balance. The tough business logic is actually not a concern of the cacheable objects, but a concern of the Service. In other words, a good match for DDD and a high performance system!

Domain Specific Language

We see that maintenance is enhanced by having clear and functional code as close to business terms as possible. Good class and method names have been used, and we believe that this is the right direction. We do not try to foresee changes to the business logic that may appear in the future; that only risks bloating the business code with generics we may not need and that hinder understanding. We acknowledge that things have to be rewritten now and then, and that we must refactor in the future without affecting historic information or code (see deployment further down). Note that we have already separated functional areas into Modules in the design, meaning that one year's tax assessment handling may be completely different from another's.

Our DSL has been demonstrated to business people, and the terms relate to existing literature ("Tax-ABC" is a nasty beast of 1100 pages ;-) ). Business confirms that they can read and understand the code, but we do not expect them to program. We expect them to give us test cases. Close communication is vital, and anyone can define test cases as columns in a spreadsheet. (There are worse test scenarios that cannot be represented as a table, but tables are a good start and actually cover most of what we have seen in this domain so far.)
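Spreadsheet-defined test cases could be wired in roughly as follows. This is a minimal sketch, not the project's test harness: the CSV layout, the field names (`salary`, `interestIncome`, `expectedTotal`) and the rule `totalIncome` are hypothetical stand-ins, and for simplicity each case is a row rather than a column.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: each spreadsheet row, exported as CSV, is one test case.
final class SpreadsheetCases {
    private SpreadsheetCases() { }

    // Stand-in for a real assessment rule; the actual rules are not shown here.
    static long totalIncome(long salary, long interestIncome) {
        return salary + interestIncome;
    }

    // Runs every data row and returns a description of each failing case.
    static List<String> run(List<String> csvLines) {
        List<String> failures = new ArrayList<>();
        // Line 0 is the header row written by the business expert.
        for (int i = 1; i < csvLines.size(); i++) {
            String[] cells = csvLines.get(i).split(",");
            long salary = Long.parseLong(cells[0].trim());
            long interest = Long.parseLong(cells[1].trim());
            long expected = Long.parseLong(cells[2].trim());
            long actual = totalIncome(salary, interest);
            if (actual != expected) {
                failures.add("row " + i + ": expected " + expected + " but got " + actual);
            }
        }
        return failures;
    }
}
```

An empty result means every case in the sheet passed; each failure message points back to the row the business expert wrote.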

Figure: Example DSL for summarizing fields in the Tax form
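As a rough illustration of the idea (not the project's actual DSL), code for summarizing fields might read something like the sketch below. `TaxForm`, `Summarize.sumOf` and the field names are all hypothetical; the only points carried over from the text are that the entity stays a thin data holder and that the business logic is static.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical entity: a thin data holder for the fields of one tax form.
final class TaxForm {
    private final Map<String, Long> fields = new LinkedHashMap<>();

    TaxForm with(String field, long amount) {
        fields.put(field, amount);
        return this;
    }

    // Missing fields count as zero so that sums stay well defined.
    long amount(String field) {
        return fields.getOrDefault(field, 0L);
    }
}

// Hypothetical DSL entry point; static, so calling it creates no service objects.
final class Summarize {
    private Summarize() { }

    // Reads as "sum of salary and pension in this form".
    static long sumOf(TaxForm form, String... fieldNames) {
        long total = 0;
        for (String name : fieldNames) {
            total += form.amount(name);
        }
        return total;
    }
}
```

A call such as `Summarize.sumOf(form, "salary", "pension")` stays close to how a business person would phrase the rule.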

The DSL approach is more feasible for us than using a Rule Engine. Partly this is because there are not really that many rules, but rather data composition, validation and calculations, which a normal programming language is so good at. Also, with a clear validation layer/component, the class names and the freedom to program in Java actually make the rule-set more understandable; it does not get so fragmented. The information model is central; that too has to be maintained and kept flexible. And last but not least: because of lifetime requirements, support such as source handling (e.g. GitHub), refactoring and code quality (e.g. Sonar) is so much more mature and well known in the Java world that no Rule Engine vendor can compete. (Now there certainly are domains where a Rule Engine is a good fit, but for our domain, we can wait.)

Figure: Logical design of the xml super-document

Aggregate store: the XML-document

All aggregates are stored in a super-document structure consisting of sections. The content of these sections is generic, except for the head. (See Aggregate Store For the Enterprise Application.)

The aggregate has a head that is common to all document types in the TaxInfo database. It defines the main keys and the protocol for exchanging them. It pretty much resembles the header of a message, or the key-object of the Aggregate in Domain Driven Design. The main aggregate boundary is defined by who it concerns, who reported the data, the schema type and the legitimate period. In either case it holds the static, long-lasting properties of an aggregate in the domain of the CAH. We do not expect to be able to change anything here without rebuilding the whole CAH. 'State' is the protocol, and as long as the 'state' is 'private', no other module can use the document.
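The head described above could be modelled along these lines. This is a sketch under assumptions: the Java field names follow the prose rather than the real TaxInfo schema, the legitimate period is simplified to a single income year, and passing the producing module as a parameter to `usableBy` is a hypothetical way to express the 'private' rule.

```java
import java.util.Objects;

// Hypothetical model of the document head. Field names follow the prose,
// not the real TaxInfo schema.
final class AggregateHead {
    enum State { PRIVATE, PUBLIC }

    final String concerns;    // who the data is about
    final String reportedBy;  // who reported the data
    final String schemaType;  // which kind of document this is
    final int incomeYear;     // the legitimate period, simplified to one year
    final State state;        // the exchange protocol

    AggregateHead(String concerns, String reportedBy, String schemaType,
                  int incomeYear, State state) {
        this.concerns = Objects.requireNonNull(concerns);
        this.reportedBy = Objects.requireNonNull(reportedBy);
        this.schemaType = Objects.requireNonNull(schemaType);
        this.incomeYear = incomeYear;
        this.state = Objects.requireNonNull(state);
    }

    // The aggregate boundary: heads with equal keys describe the same aggregate.
    String boundaryKey() {
        return concerns + "|" + reportedBy + "|" + schemaType + "|" + incomeYear;
    }

    // While the state is PRIVATE, only the producing module may use the document.
    boolean usableBy(String module, String producingModule) {
        return state == State.PUBLIC || module.equals(producingModule);
    }
}
```

All fields are final, which mirrors the point that the head holds long-lasting properties that are not expected to change.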

The other sections of the document are owned exclusively by the module that produces the content, and belong to the domain the module implements. 'Case' is the state and process information that the module mandates; in the example the document may be 'public' in any of the different phases that tax handling goes through. For example, for a typical tax payer (identified by 'concerns'), when an income year is finished there will exist 4 documents of this type, each representing a phase in the tax process (prognosis, prefilled, delivered and assessed).

The Aggregate section is where the main business information is. All relevant content is stored here (also as copies, even though it may be present in other supporting documents). This makes the document valid on its own, so it need not be put together at query time or for later archiving. Any field may either be registered uniquely in this aggregate, or be a copy that references some other document as its master. The master is referenced by 'ref GUID', which is used by the business logic in 'SelvangivelseService' to sew objects in the supporting aggregates together and create the domain object model of the Module.
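Following a 'ref GUID' from a copied field back to its master could look roughly like this. The classes `FieldValue`, `SupportingDocument` and `Sewing` are hypothetical, and a plain `Map` stands in for 'SupportRepo'; the real repository and object model are not shown in this article.

```java
import java.util.Map;
import java.util.Optional;
import java.util.UUID;

// Hypothetical field: registered in this aggregate when masterRef is null,
// otherwise a copy whose master lives in the referenced supporting document.
final class FieldValue {
    final String name;
    final long amount;
    final UUID masterRef;

    FieldValue(String name, long amount, UUID masterRef) {
        this.name = name;
        this.amount = amount;
        this.masterRef = masterRef;
    }
}

// Hypothetical stand-in for a document held in 'SupportRepo'.
final class SupportingDocument {
    final UUID id;
    final String schemaType;

    SupportingDocument(UUID id, String schemaType) {
        this.id = id;
        this.schemaType = schemaType;
    }
}

final class Sewing {
    private Sewing() { }

    // Follows the reference from a copied field to its master document.
    // Empty for a field registered in this aggregate, or if the master is absent.
    static Optional<SupportingDocument> masterOf(FieldValue field,
                                                 Map<UUID, SupportingDocument> supportRepo) {
        if (field.masterRef == null) {
            return Optional.empty();
        }
        return Optional.ofNullable(supportRepo.get(field.masterRef));
    }
}
```

Because the amount is copied into the field itself, the document remains valid even when the lookup returns empty.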

The Anomalies section contains validation errors and other defects in the Aggregate, and only concerns this occurrence. We may assess such a document even though it has anomalies, and the information here is relevant to the tax payer as it gives more insight.

The Audit section contains all changes to the aggregate, including automatic handling, to provide insight into what the system has done during assessment. This log contains all changes from the first action in the first phase of assessment, not just those to the present document.

Both Anomalies and Audit can reference any field in the Aggregate.

Aggregates are not stored all of the time; things are stored as XML only at specific steps in the business process, mostly when legislation states that we must have an official record, for example when we send the pre-filled form to the tax payer. The rest of the time the business logic runs - either automatically because of events, or through manual case handling - and updates the objects without any persistence at all. Sweet!
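The separation between in-memory updates and occasional official records can be sketched as below. Everything here is a simplifying assumption: the class `AssessmentInMemory`, the single `salary` field, the hand-built XML string and the list standing in for the document store.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch: updates stay in memory, XML is written only on demand.
final class AssessmentInMemory {
    private long salary; // hypothetical business field
    private final List<String> officialRecords = new ArrayList<>(); // stand-in for the XML store

    // Ordinary business updates only touch the in-memory object.
    void updateSalary(long newSalary) {
        this.salary = newSalary;
    }

    // Called only at steps where an official record is required.
    void storeOfficialRecord(String phase) {
        officialRecords.add("<aggregate phase=\"" + phase + "\"><salary>"
                + salary + "</salary></aggregate>");
    }

    List<String> officialRecords() {
        return Collections.unmodifiableList(officialRecords);
    }
}
```

Any number of calls to `updateSalary` leave the store untouched; only `storeOfficialRecord` produces a document.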

Deployment

Figure: Logical deployment view

Every node is functionally equal and has the exact same business logic. To achieve scale the data is partitioned between the different nodes.

This illustration shows how the different types of aggregates are partitioned between the different nodes, where the distribution key co-locates all aggregates that belong to the same "tax-family". This is transparent to the business logic, and the grid software makes sure that partitioning, jobs, indexes, failover, etc. are handled. By co-locating we know that all data access is local to the VM. This gives high performance. The business logic functions even if we have a different distribution key; it only takes more time to complete. Some jobs will span VMs and they will use more time, but Map-Reduce makes sure that most of the work is handled within one VM.
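In practice the grid software does the partitioning, but the effect of using the tax-family as distribution key can be illustrated with a small sketch. `AggregateKey`, `TaxFamilyPartitioner` and the hash-modulo placement are assumptions made for illustration only, not how any particular grid product assigns partitions.

```java
// Hypothetical key: the aggregate type identifies the document kind,
// the tax-family id is the distribution key.
final class AggregateKey {
    final String aggregateType;
    final String taxFamilyId;

    AggregateKey(String aggregateType, String taxFamilyId) {
        this.aggregateType = aggregateType;
        this.taxFamilyId = taxFamilyId;
    }
}

final class TaxFamilyPartitioner {
    private TaxFamilyPartitioner() { }

    // Only the tax-family id decides the node, so every aggregate type
    // belonging to one family lands on the same node.
    static int nodeFor(AggregateKey key, int nodeCount) {
        if (nodeCount <= 0) {
            throw new IllegalArgumentException("nodeCount must be positive");
        }
        // floorMod keeps the result in [0, nodeCount) even for negative hash codes.
        return Math.floorMod(key.taxFamilyId.hashCode(), nodeCount);
    }
}
```

Switching the distribution key to something else would still give a valid placement, but aggregates of one family would then be spread over several nodes and need remote access.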

We have learned that memory usage is pretty linear with time usage, and that the business logic is not the main driver. The difference between 10 and 100 rules matters much less than 2 or 3 KB of aggregate size. So fight for efficient aggregates, and build clear Java business logic!

Lifetime deployment

Figure: Where do you draw the line?

There are deployment challenges as time goes by. Technology will change over time, and at some point we will have a new OS, JVM, or new grid software to run this on. We have looked into different deployment models, and believe that we have the strategy and tool-set to manage this. At some time we must refactor big-time and take in new infrastructure software, but we do not want to affect previous years' data or logic. We want to handle historic information side-by-side in some Module, and the XML representation of the aggregate is the least common denominator. It is stored in TaxInfo. Modules deployed on different infrastructure must be able to communicate via services. Modules running on an old platform will co-exist with Modules on a new platform. In these scenarios we do not need high performance, so they need not co-exist in the same Grid, but can communicate via WebServices.

In the above-mentioned solution space, it is important to distinguish between source and deployment. We may very well have a common source, with forks all over the place if that is necessary. Even though we deploy separate Modules on separate platforms, we can still keep control through a common source. (I may get back with some better illustrations on this later.)

Conclusion and challenges

It is now shown that our domain should fit on this new architecture and that the CAH concept holds. We also now think that there has never been a better time to rewrite the existing legacy. We have a platform that performs, and we can build high-level test cases (both constructed and regression tests) "brick by brick" until we have full coverage. We also understand the core of the domain much better now, and we should hurry because people with core knowledge will soon be retiring.

This type of design need not run on a grid platform only because of performance. It will also benefit because the aggregates are stored asynchronously. The persistence logic is kept out of the business logic, and there will be no need to "save as fast as the user has pressed the button". It allows you to think horizontally through the business logic layer, and not vertically as Java architecture sadly has been forcing us to for so long.

As presented in the PoC, we now have a fantastic possibility to achieve the Kinder Surprise, but we certainly must have good steering to make a better day.

Kinder Surprise; simpler, cheaper, faster!

Module and Aggregate design in the CAH by Tormod Varhaugvik is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

