We have a classic IT landscape with different systems built over the last 30 years: large and small "silos", each mostly serving its own business unit, with file-based integration between them. Mainly we have Cobol/DB2 and Oracle/PL-SQL/Forms.

We face a fairly classic situation. How do we serve the public? How do we get a holistic view of the entities we handle? How do we handle events in more "real time"? How do we make maintenance less expensive? How do we become much more responsive to change? How do we ensure that our systems and architecture are maintainable in 2040? Every business that is not greenfield is in this situation.

We are working with Enterprise Architecture and using TOGAF. Within this we have defined a target architecture (I have written about core elements of this in Concept for a aggregate store and processing architecture), and are about to describe a road map for the next 5-10 years.

Content:

- What's this "silo"?

- What do we expect of the cloud?

- Loose coupling wanted

- Cooperating federated systems

- Complexity vs uptime

- Target architecture main processes

Figure: (C) Monty Python - Meaning of Life

Typically these silos each have a subset of information and functionality that affects the persons and companies that we handle, but getting the overall view is really hard. Putting a classic SOA strategy on top of this is a catastrophe.

Size is not the main classifier though. Some problems are hard and large. We will have large systems just because we have large amounts of state and complex functionality.

What do we expect of the cloud?

Figure: Cloud container

But not all problems or systems gain from running in the cloud. Most systems built to this day simply do not run in the cloud. Also, data should not "leave our walls", but we can always set up our own cloud or take a more national, government-wide approach.

Divide and conquer

Figure: Modules and independent state

The organization that maintains and runs a large system must understand how its system is being used. It must understand the services others depend upon and what SLAs are put on them. Watch out for a strategy where some project "integrates" with these systems without involving the system's organization or the system itself. Release handling and stability will not gain from this "make minor" approach. The service is just the tip of the iceberg.

A silo is often the result of a unilateral focus on organization. The business unit deploys a solution for its own business processes, overlooking reuse and the greater business process itself. Dividing such a silo is largely a matter of governance.

You will also see that different modules have different technical requirements. Therefore there may be different IT architectures for the different systems.

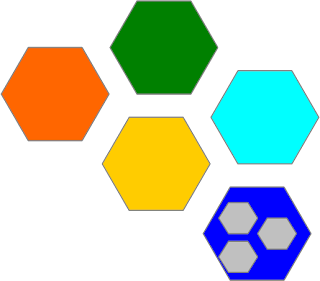

When a module is master in its domain, you must understand the algorithms and the data. If the data elements have independent behavior (can be sharded), processing can be parallelized and run in the cloud. In the example the blue module has independent information elements. It will probably gain from running in the cloud, but must still cooperate with the yellow and green modules.
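To make "independent behavior" concrete, here is a minimal sketch. It assumes that every information element carries a key and that processing one key never needs the state of another. The names (`shard_of`, `partition`, `process_shard`, `process_all`) and the `(key, amount)` record layout are hypothetical and only illustrate the idea. Threads stand in for what would be separate nodes in a real cloud deployment.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
import zlib


def shard_of(key: str, shard_count: int) -> int:
    """Map a key to a shard with a stable hash (same key, same shard)."""
    return zlib.crc32(key.encode("utf-8")) % shard_count


def partition(records, shard_count):
    """Group (key, amount) records by shard."""
    shards = defaultdict(list)
    for key, amount in records:
        shards[shard_of(key, shard_count)].append((key, amount))
    return shards


def process_shard(records):
    """Sum amounts per key, touching only the state inside this shard."""
    totals = defaultdict(int)
    for key, amount in records:
        totals[key] += amount
    return dict(totals)


def process_all(records, shard_count=4):
    """Process every shard in parallel and merge the disjoint results."""
    shards = partition(records, shard_count)
    merged = {}
    with ThreadPoolExecutor(max_workers=shard_count) as pool:
        for result in pool.map(process_shard, shards.values()):
            merged.update(result)  # safe: no key lives in two shards
    return merged
```

The merge step is trivial only because the shards share no keys. As soon as one element needs the state of another, that independence is gone and the module is a poor candidate for this kind of scaling.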

Cooperating federated systems

Figure: Cooperating federated systems

In the example there are two systems, and they are two systems for good reasons. The producer of ice cream must tell others that a new ice cream is in stock from some date, and it must tell them when an ice cream goes out of stock. Furthermore, it must answer for the properties of an ice cream. There is also a process between the two systems for ordering, billing and delivery of ice cream. The systems are specialized, but they have a functional dependency and must cooperate. Information must be commonly understood, but the master has the final say. Services and information must be modeled to achieve a common goal, so even though there are defined masters, they must provide meaningful and efficient services. User dialogs are web-based user interfaces that allow a human to do work. Even though each system supports its own set of dialogs, the user sees them as one.
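The producer side of the ice cream example could be sketched roughly as follows. This is an illustration under assumptions, not a description of our actual systems. The class names (`Producer`, `Shop`, `StockEvent`), the event kinds and the in-process callback mechanism are all hypothetical, and the sketch applies events immediately rather than honoring the effective date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List


@dataclass(frozen=True)
class StockEvent:
    """Hypothetical event: an ice cream enters or leaves stock on a date."""
    product_id: str
    kind: str  # "in_stock" or "out_of_stock"
    effective: date


class Producer:
    """Master for ice cream: owns the properties and announces stock changes."""

    def __init__(self) -> None:
        self._properties: Dict[str, dict] = {}
        self._subscribers: List[Callable[[StockEvent], None]] = []

    def subscribe(self, handler: Callable[[StockEvent], None]) -> None:
        self._subscribers.append(handler)

    def launch(self, product_id: str, properties: dict, effective: date) -> None:
        self._properties[product_id] = properties
        self._publish(StockEvent(product_id, "in_stock", effective))

    def discontinue(self, product_id: str, effective: date) -> None:
        self._publish(StockEvent(product_id, "out_of_stock", effective))

    def properties_of(self, product_id: str) -> dict:
        """Service: the master answers for the properties of an ice cream."""
        return dict(self._properties[product_id])

    def _publish(self, event: StockEvent) -> None:
        for handler in self._subscribers:
            handler(event)


class Shop:
    """Consumer: tracks what can be ordered, but keeps no copy of master data."""

    def __init__(self, producer: Producer) -> None:
        self._producer = producer
        self.orderable = set()
        producer.subscribe(self.on_stock_event)

    def on_stock_event(self, event: StockEvent) -> None:
        # Simplification: the effective date is ignored in this sketch.
        if event.kind == "in_stock":
            self.orderable.add(event.product_id)
        elif event.kind == "out_of_stock":
            self.orderable.discard(event.product_id)

    def describe(self, product_id: str) -> dict:
        # Properties are always fetched from the master, never duplicated.
        return self._producer.properties_of(product_id)
```

The shop reacts to events for what changes over time and calls a service for what the master owns. That split is what keeps the two systems loosely coupled while still cooperating.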

Complexity vs up-time

Figure: Complexity in depth

Still the systems must cooperate, and there is a flow back as well, with receipts, validation results, and finished results. This is the process protocol that binds these systems together. External systems of course also cooperate with our systems, and we want business processes to span computing domains.

There is probably more cloud closer to the user (or to external systems), but that may depend more on the type of system than on the size of the problem the system solves.

The silo strategy (the one you would be tempted to take, remember "don't feed it!") would be to put everything into one solution, because functionality and data are to some extent coupled. The result is low up-time, scarce maintenance windows, and security problems knocking on your door.

Target architecture main processes

Figure: Main processes and support systems

The Party domain handles the Parties. Parties are a collection of physical entities (persons) and legal entities (companies), both domestic and international. The main task is knowing who-is-who (identification), offering services for attributes on these entities, and emitting events when relevant things happen in the real world. Relevant events are, for instance, birth, marriage, relocation or death.

Assessment handles many different data elements, along with complex validation, assessment, manual case handling and calculations. It collects information about material values, and performs assessment and calculations when things change. After calculation it emits events so that the collection process can do its job. More on Assessment in Concept for a aggregate store and processing architecture.

Collection keeps an account for every taxpayer and makes sure that the imposed fees are deducted and paid. It also makes sure that the money is distributed to all relevant public parties.
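The event flow between the three domains can be sketched as below. This is a deliberately small illustration. The event classes, the callback wiring and the calculation rule passed in as `calculate` are hypothetical. It also assumes, for the sake of the example, that Assessment recalculates in response to Party events, which is only one of the changes it may react to.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PartyEvent:
    """Hypothetical event emitted by the Party domain."""
    party_id: str
    kind: str  # e.g. "birth", "marriage", "relocation", "death"


@dataclass(frozen=True)
class AssessmentCompleted:
    """Hypothetical event emitted by Assessment after a calculation."""
    party_id: str
    amount_due: int


class Collection:
    """Keeps one account per taxpayer."""

    def __init__(self) -> None:
        self.balance = defaultdict(int)

    def on_assessment(self, event: AssessmentCompleted) -> None:
        self.balance[event.party_id] += event.amount_due

    def pay(self, party_id: str, amount: int) -> None:
        self.balance[party_id] -= amount


class Assessment:
    """Recalculates when something changes, then emits an event."""

    def __init__(
        self,
        calculate: Callable[[PartyEvent], int],
        on_completed: Callable[[AssessmentCompleted], None],
    ) -> None:
        self._calculate = calculate        # placeholder for the real rules
        self._on_completed = on_completed  # who receives the result

    def on_party_event(self, event: PartyEvent) -> None:
        amount = self._calculate(event)
        self._on_completed(AssessmentCompleted(event.party_id, amount))
```

Each domain only knows the events it consumes and emits, not the internals of its neighbors, which is what allows each of them to have its own IT architecture.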

These three domains all require (or can exploit) different types of IT architecture. Party has a steady number of transactions, but massive querying. We can use the cloud for caching and answering queries. Collection is a classical accounting / business suite type of application, where the cloud would not give us much, since parallel processing is hard (for now). Assessment is where we will take our first steps into using the cloud, both for transactions and for querying.

Migration strategy and the "cloud" by Tormod Varhaugvik is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
