Continual aggregate hub

In our domain, tax administration, we are working on an information systems design to handle our challenges. We collect information about real-world assets (“values”) and calculate a fee on them. These assets have multi-dimensional capabilities. Within this information systems design, we define an aggregate-store and processing architecture that can handle large volumes and high uptime, deal with rules and regulations that change from year to year, be robust and maintainable under tough lifetime requirements, and provide effective parallel processing of independent tax subjects.

We would like to present this design to you: the continual aggregate hub.

We are inspired by Domain Driven Design, and have used design elements from Tuple Space, BASE, SOA, the CQRS pattern, Operational Data Store (ODS), Integration Patterns, and experience from operating large distributed environments. Definitions from Domain Driven Design are used in the text: Modules, Entities, Aggregates, Services and Repositories.

- Continual: ongoing processing and multi-dimensional information (re-used in different business domains)

- Aggregate: as defined in Domain Driven Design, stored as XML documents

- Hub: one place to share Aggregates

We believe that other systems with similar characteristics may benefit from the design, and it is a great fit for In-Memory and Big Data platforms.

(January 2013: A Proof of Concept is documented here, and we are now actually building our systems on this design)

(June 2013: This is now in production. BIG DATA advisory)

Concept

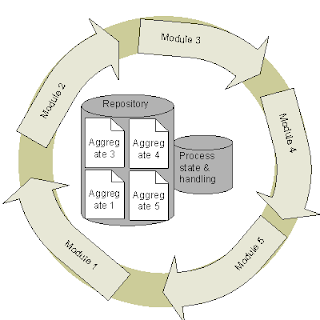

Illustration 1. Continual Data Hub

Continual aggregate hub (CAH)

This Repository is the core of the solution; its task is to keep different sets of Aggregates. The repository is aggregate-agnostic. It does not impose any schema on these; it is up to the producer and consumers to understand the content (and for them it must be verbose). The only thing the repository mandates is a common header for all documents. The header describes who the information belongs to, who produced it, what type it is, for what time it is legitimate, and when it was delivered. This means that all Aggregates share these metadata. An Aggregate is data that some Module produced and that is meaningful for the domain the Module handles. The Aggregate is materialized in the Repository as an XML document, to provide flexibility and meet lifetime requirements. The Aggregate is the product and purpose of the Module that produced it. The Aggregate should be in a state that makes it valid for a period of time, and that is relevant for the domain.
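As an illustration of the common header, here is a minimal sketch in Python. The element names (`aggregate`, `header`, `body`, `validFrom` and so on), the `Header` class and the sample payload are our assumptions for the example, not the actual document format. The point is that the repository only reads the header and treats the body as opaque.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone
import xml.etree.ElementTree as ET


@dataclass(frozen=True)
class Header:
    owner: str           # who the information belongs to
    producer: str        # which Module produced it
    doc_type: str        # what type of Aggregate this is
    valid_from: date     # first day the content is legitimate
    valid_to: date       # last day the content is legitimate
    delivered: datetime  # when it was delivered to the repository


def to_document(header: Header, payload: ET.Element) -> str:
    """Wrap an opaque payload in the mandatory header (hypothetical layout)."""
    root = ET.Element("aggregate")
    head = ET.SubElement(root, "header")
    ET.SubElement(head, "owner").text = header.owner
    ET.SubElement(head, "producer").text = header.producer
    ET.SubElement(head, "type").text = header.doc_type
    ET.SubElement(head, "validFrom").text = header.valid_from.isoformat()
    ET.SubElement(head, "validTo").text = header.valid_to.isoformat()
    ET.SubElement(head, "delivered").text = header.delivered.isoformat()
    # The repository never inspects the body; only producer and consumers do.
    ET.SubElement(root, "body").append(payload)
    return ET.tostring(root, encoding="unicode")


def read_header(document: str) -> Header:
    """Read only the header, which is all the repository needs to understand."""
    head = ET.fromstring(document).find("header")
    return Header(
        owner=head.findtext("owner"),
        producer=head.findtext("producer"),
        doc_type=head.findtext("type"),
        valid_from=date.fromisoformat(head.findtext("validFrom")),
        valid_to=date.fromisoformat(head.findtext("validTo")),
        delivered=datetime.fromisoformat(head.findtext("delivered")),
    )


# Hypothetical example data.
payload = ET.fromstring("<fixedValues><income>500000</income></fixedValues>")
header = Header("subject-123", "validate-consolidate-fix", "FixedValues",
                date(2013, 1, 1), date(2013, 12, 31),
                datetime(2014, 1, 15, 10, 0, tzinfo=timezone.utc))
document = to_document(header, payload)
print(read_header(document).owner)
```

Because the header round-trips without touching the body, new Aggregate types can be introduced without any change to the repository.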

Together with the CAH is a process state store and some sort of process engine. Its task is to hold the event for the produced Aggregate and make sure that subsequent Services are executed (see Enterprise wide business process handling). This is our soft state, and it contains high-level events that are meaningful outside the Module. These Services may be Modules. This may very well follow the CQRS pattern.

(October 2014: We now know the design of the Case Container within "Process state & handling")

Modules

A Module lives by being fed Aggregates that have changed since its last processing. It may need one Aggregate or several, depending on the task and what content the Module needs to do its work. The feeding may be a live event, a batched set at some frequency, or the Module may feed when it feels like it. When a Module is finished with its task, its Aggregate (or several) is sent to the CAH. The Module is the sole owner of its Aggregates and is the only source for them. A Module should ideally have no state other than the Aggregates, and can therefore have a temporary life in the processing environment. It may, though, be a large “silo” sort of system that just interacts with the CAH.
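The feeding can be sketched as follows. This is a minimal sketch under our own assumptions: the `Aggregate` class, the `sequence` delivery counter, the in-memory `hub` list and the consolidation rule (a plain sum) are all hypothetical stand-ins. It shows a Module that keeps no state of its own: the watermark is handed in and returned, and for each owner with changes the Module re-reads every Aggregate it needs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Aggregate:
    owner: str
    doc_type: str
    content: dict      # stand-in for the XML payload
    sequence: int = 0  # hypothetical delivery counter assigned by the CAH


def owners_with_changes(hub, doc_type, last_seen):
    """Owners that have at least one Aggregate delivered after the watermark."""
    return sorted({a.owner for a in hub
                   if a.doc_type == doc_type and a.sequence > last_seen})


def consolidate(hub, owner):
    """Recompute the product from all of the owner's Values, not just new ones."""
    amounts = [a.content["amount"] for a in hub
               if a.owner == owner and a.doc_type == "Value"]
    return Aggregate(owner, "FixedValues", {"total": sum(amounts)})


def run_once(hub, last_seen):
    """One feeding: returns the produced Aggregates and the new watermark."""
    produced = [consolidate(hub, owner)
                for owner in owners_with_changes(hub, "Value", last_seen)]
    watermark = max((a.sequence for a in hub), default=last_seen)
    return produced, watermark


hub = [
    Aggregate("A", "Value", {"amount": 100}, sequence=1),
    Aggregate("A", "Value", {"amount": 250}, sequence=2),
    Aggregate("B", "Value", {"amount": 40}, sequence=3),
]
produced, watermark = run_once(hub, last_seen=2)  # only owner B has changed
```

The same `run_once` works for a live event, a batch, or an occasional run; only the caller's choice of when to invoke it differs.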

(Module and Aggregate Design)

Aggregates

An Aggregate is a distinct set of data that is loosely coupled to other Aggregates, though they may share Entities. Just as the Modules are distinct because they are loosely coupled, the data must be so too. Clearly some types of enterprise applications are not suited for this, for instance if the data model is large and there is no clear loose coupling. The Aggregate encapsulates the enterprise data for parallel processing, ensures the lifetime requirements, and makes it easier to handle migration to newer systems. The Aggregate may also be tagged with security levels so that we have a general way of handling access. Entities should map to objects that have behaviour, and an Aggregate should map to an object structure, providing a 1:1 mapping between the data and the basic behaviour on it. This will also reduce IO and locking compared to classic OR-mapping. (see Aggregate persistence for the Enterprise Application)

Services

The architecture provides for event-driven processes, but the consequence of an event is not necessarily handled in real time. Any Module being fed must be able to consume at the pace that suits it best, or even re-run if there is a fault in the data produced. There may very well be no Module to handle an event yet; the Module for the task may be introduced later. The events make the produced Aggregates form a logical queue, and we take ownership of the events (process state). The event must not be hidden within some messaging engine or queue product.
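One way to picture this logical queue is an append-only event log with one read position per consumer. The sketch below is an assumption on our part, not the production design: `EventLog`, `poll` and `rewind` are hypothetical names, and everything lives in memory. It shows that events are never removed, so a consumer can read at its own pace, re-run, or be introduced later.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    position: int   # place in the log
    owner: str      # who the produced Aggregate belongs to
    doc_type: str   # type of the produced Aggregate


@dataclass
class EventLog:
    events: list = field(default_factory=list)
    cursors: dict = field(default_factory=dict)  # consumer -> next position

    def append(self, owner, doc_type):
        """Record that an Aggregate was produced; events are never removed."""
        event = Event(len(self.events), owner, doc_type)
        self.events.append(event)
        return event

    def poll(self, consumer, doc_type, limit=100):
        """Advance the consumer's cursor and return the events it cares about."""
        start = self.cursors.get(consumer, 0)
        window = self.events[start:start + limit]
        self.cursors[consumer] = start + len(window)
        return [e for e in window if e.doc_type == doc_type]

    def rewind(self, consumer, position=0):
        """Let a consumer re-run, for example after faulty data was produced."""
        self.cursors[consumer] = position


log = EventLog()
log.append("A", "Value")
log.append("A", "FixedValues")
log.append("B", "Value")

first = log.poll("fee-calculation", "FixedValues")  # consumes at its own pace
late = log.poll("audit", "Value")                   # a Module introduced later
```

Since the log is plain data that we own, it can be inspected, queried and replayed without depending on the internals of a messaging product.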

Features

This is continual handling of aggregates that acts as a bus for its producing and consuming parties, but stores the aggregates for subsequent consumption. Data that would anyway have moved from one module to another (over an ESB) is stored centrally, once. This means that the modules participating in the process do not need any permanent store or state themselves. This is good because we can exchange one module for another implementation without migrating any data. It also means that things may be repeated (because of errors, maybe) and that a data consumer does not depend on the uptime of the module that produced the data (loose coupling). The system that is the producer (owner) of some Aggregate must deliver functionality (some sort of plug-in) to the CAH, so that the Aggregate can be understood by a consuming system or shown in some GUI.

We believe that uptime, the ability to change, and the ability to understand the total system are all enhanced with this design. Functionally we isolate the production of an aggregate from the consumption of it, which makes it easier to reach our uptime requirements. Data is always prepared and ready. The CAH only handles querying and accepting new versions of an aggregate, not producing new aggregates. A major problem with many large systems is that nobody really knows what data the system produces, and we know for sure that a lot of intermediate data is stored unnecessarily. Large systems also tend to have too many responsibilities and get tied down. In our proposed architecture, if there is need for a new product (an aggregate), just make a new module and introduce a new aggregate. The data warehouse is an important element in our total architecture, for running reports, because we do not want to mess up our production flow with secondary products. (see CAH and Data Warehouse.html)
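The narrow responsibility of the CAH, accepting new versions and answering queries, can be sketched like this. It is a minimal in-memory sketch: the `AggregateStore` class, its method names and the key of owner, type and period are our assumptions for illustration.

```python
from collections import defaultdict


class AggregateStore:
    """Append-only store: a document is never changed, only superseded."""

    def __init__(self):
        # (owner, doc_type, period) -> list of documents, oldest first
        self._versions = defaultdict(list)

    def put(self, owner, doc_type, period, document):
        """Accept a new version and return its version number (from 1)."""
        versions = self._versions[(owner, doc_type, period)]
        versions.append(document)
        return len(versions)

    def latest(self, owner, doc_type, period):
        """Return the current version, or None if nothing was delivered."""
        versions = self._versions.get((owner, doc_type, period))
        return versions[-1] if versions else None

    def history(self, owner, doc_type, period):
        """Return every version ever delivered, oldest first."""
        return list(self._versions.get((owner, doc_type, period), []))


store = AggregateStore()
store.put("subject-123", "FixedValues", 2013, "<fixedValues>v1</fixedValues>")
store.put("subject-123", "FixedValues", 2013, "<fixedValues>v2</fixedValues>")
current = store.latest("subject-123", "FixedValues", 2013)
```

Keeping every version means a consumer can always be re-run against the data as it was, and nothing intermediate is stored outside the hub.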

We also see that testing is enhanced because we have a defined input and output on a rich functional level, and the modules participating in the process do not need to know about the CAH. For scaling, we see that this nicely supports linear scalability, since we now have independent sets of data (aggregates) that are organised by distinct owners. Any calculation in the modules does not (in most cases) span different owners of aggregates.
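Because calculations rarely span owners, the work can be partitioned by owner and each partition handled independently. The following sketch assumes input as simple `(owner, amount)` pairs and a summing calculation, both hypothetical; a thread pool stands in for what would be separate processing nodes in a real deployment.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def group_by_owner(values):
    """Partition (owner, amount) pairs so each owner can be handled alone."""
    groups = defaultdict(list)
    for owner, amount in values:
        groups[owner].append(amount)
    return groups


def calculate_for_owner(item):
    """A calculation that only ever looks at one owner's data."""
    owner, amounts = item
    return owner, sum(amounts)


def process_in_parallel(values, workers=4):
    """Run the per-owner calculation concurrently; no locking is needed
    because no two tasks share an owner."""
    groups = group_by_owner(values)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(calculate_for_owner, groups.items()))


values = [("A", 100), ("B", 40), ("A", 250), ("C", 7)]
totals = process_in_parallel(values)
```

Adding workers (or nodes) increases throughput without coordination between them, which is what makes the scaling close to linear as long as owners stay independent.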

No system is made from scratch, and the solution gives legacy systems a chance to participate and gives their aggregates the uptime that is required. With the right interfaces and aggregates, a legacy system can be connected to the solution at its own pace. A legacy system may be large, have its own state and take a long time. (Here we will have a problem with redundancy and things getting out of sync, but we know all previous data and state, so we are better equipped to handle these situations.) (see Migration strategy and the cloud)

A module may also include some manual tasks. In this context the task is propagated to a task list (a module that presents manual tasks to users). The task points to a module where the human may do their work. It is transparent to the architecture whether a task is human or not. Only the module knows. (see CAH architecture.html)

As you may have noticed, the process flow is not orchestrated, but choreographed. In our experience, the SOA view where everything is processes and services overlooks the importance of state and that some set of data must be seen together in a domain. A major part of handling a process is actually done within the domain (in a module), where there is no loose coupling between the data and the process. So we say: respect the modules, and let modules cooperate in getting the enterprise process done. If there is a need for an orchestrated process, build a module for it.

Our domain requirements

Below is our wheel, which illustrates a continuous process that acts on information from the outside and responds back. We seek to respond to events in the real world as they happen, and not later on. Information would be salary being paid to a person, the savings and interest of a bank account, any IRS form, etc. This information is collected as Values, and these are subject to tax calculation or control purposes. We need to introduce new Values and validations quickly. To ensure good quality information we need to respond immediately with a validation result, and maybe also with the tax that should be paid. The wheel must handle different tax domains (inheritance, VAT, income tax…) side by side, because many tasks in such a process are generic and information is in many cases common. Another challenge is the variation in data format and rules (within the same domain) from year to year, so the wheel must offer different handling based on legitimate periods (e.g. fiscal year) side by side. The wheel only handles information about real objects and the tax imposed on a subject, not about the subjects themselves (address or id of persons, companies or organizations), nor the money that is the imposed fee. But of course, these are also processes (systems / modules) that the wheel must cooperate with.
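Handling several tax domains and fiscal years side by side can be sketched as a lookup keyed on domain and year. Everything specific here is invented for the example: the `rule` decorator, the function names, and above all the rates and the allowance, which are hypothetical numbers and not real tax rules.

```python
from decimal import Decimal

RULES = {}  # (domain, fiscal_year) -> calculation function


def rule(domain, fiscal_year):
    """Register a calculation for one tax domain and one fiscal year."""
    def register(func):
        RULES[(domain, fiscal_year)] = func
        return func
    return register


@rule("income", 2012)
def income_2012(fixed_total):
    return fixed_total * Decimal("0.28")  # hypothetical flat rate


@rule("income", 2013)
def income_2013(fixed_total):
    # Hypothetical change from one year to the next: a new allowance.
    taxable = max(fixed_total - Decimal("50000"), Decimal("0"))
    return taxable * Decimal("0.27")


def imposed_fee(domain, fiscal_year, fixed_total):
    """Pick the rule that was legitimate for the period of the data."""
    try:
        calculate = RULES[(domain, fiscal_year)]
    except KeyError:
        raise LookupError(f"no rule for {domain} {fiscal_year}") from None
    return calculate(fixed_total)


fee_2012 = imposed_fee("income", 2012, Decimal("100000"))
fee_2013 = imposed_fee("income", 2013, Decimal("100000"))
```

With this shape, old rules stay in place untouched when a new year is added, so a complaint about an earlier fiscal year is still calculated with the logic that applied then.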

A regular citizen with a normal economic life does not actually need to take any action. We collect and pre-fill everything for them.

On the volume side there are approximately 300 million Values per year, which result in 20 million tax forms (Fixed values), and the system must handle 10 years of data (and corresponding logic) because of complaints and detected fraud.
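To get a feel for these numbers, here is a back-of-the-envelope calculation. It assumes that all 10 years are kept available at once and that the load is spread evenly over the year; in practice the load is likely to peak around deadlines, so the average rate is only a lower bound for sizing.

```python
VALUES_PER_YEAR = 300_000_000
TAX_FORMS_PER_YEAR = 20_000_000
YEARS_RETAINED = 10
SECONDS_PER_YEAR = 365 * 24 * 3600

# Documents to keep available if every year is retained in full.
total_values = VALUES_PER_YEAR * YEARS_RETAINED
total_forms = TAX_FORMS_PER_YEAR * YEARS_RETAINED

# Ratio of incoming Values to resulting tax forms.
values_per_form = VALUES_PER_YEAR / TAX_FORMS_PER_YEAR

# Average arrival rate under the (unrealistic) even-load assumption.
average_values_per_second = VALUES_PER_YEAR / SECONDS_PER_YEAR

print(total_values, total_forms, values_per_form,
      round(average_values_per_second, 1))
```

That is about 3 billion Values and 200 million tax forms over the retention period, with roughly 15 Values behind each tax form.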

We must also be able to put a new type of Value into production in less than 2 weeks.

Illustration 2. CAH Tax domain

| Processing Modules | |
| --- | --- |
| Reporter channel | Any subject that reports on other subjects it handles, such as employers, payroll systems, bank systems or stock trading systems. These report directly to us. Information is stored as Values ("assets" from the real world). This Module is highly generic, but will have different types of validation. |
| Self Service channel | Any subject that either wants to look at information reported about themselves, or wants to report their own data. Own data may come from systems or via forms on the web. Information is stored as Values. This Module is highly generic, but will have different types of validation. |
| Validate, consolidate and fix | Information stored in Values is aggregated and fixed after a validation process in which the legitimacy of the information is checked. There are many groups of fixation: income, savings, property, etc. There will be Modules for each tax domain, and they will be quite complex and involve manual handling. The product (aggregate) of this step is relevant for the Self Service Module, so that the subject can object to the result and claim other Values than the ones collected. |
| Fee calculation | A fee is calculated based on the Fixed values. The Module produces the Imposed fee. This Module will exist in many flavours, one for each tax domain. |
| Deduct | This Module interacts with the accounting system and produces the Deducted amount. Most subjects have prepaid tax directly through their employers or pay some pre-filled amount during the year. This step also produces a tax card that is sent back to the payroll systems, instructing them to change the tax percentage if necessary. |

| CAH | |
| --- | --- |
| TaxInfo | The “Tuple Space” Repository with Aggregates. All aggregates have this in common: who the information belongs to (the tax subject), who produced it, what type it is (a reference to an XSD, maybe), for what time it is legitimate (e.g. fiscal year or period), and when it was created. An aggregate is never changed but comes in new versions. TaxInfo should internally have a high degree of partitioning freedom so that we can scale “out of the box”. |
| Process state & handling | Soft state, event log and choreography. The Process state and its XML documents are structured and referenced in the design of the Case Container. |

Concept for a aggregate-store and processing architecture by Tormod Varhaugvik is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.